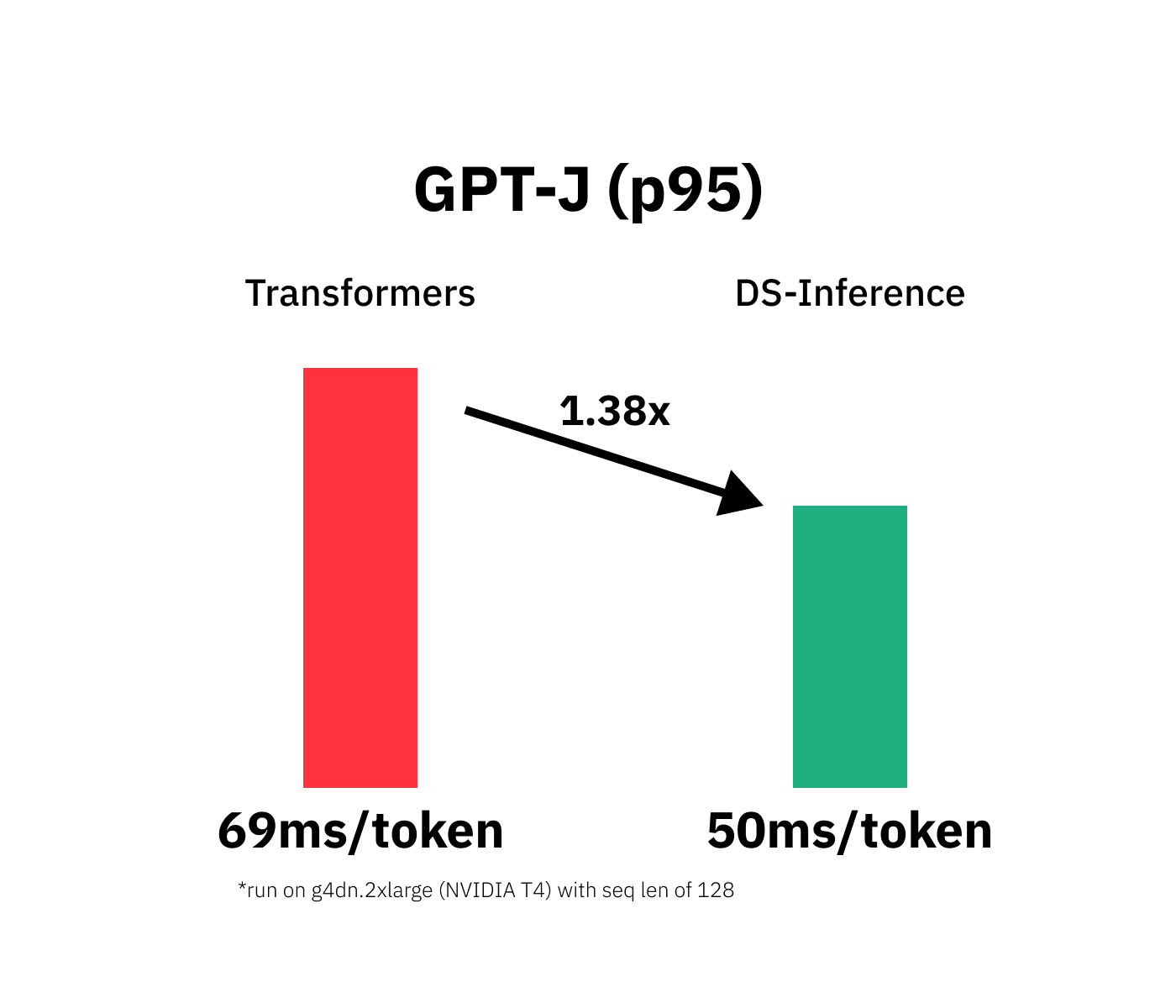

Reduce inference costs on Amazon EC2 for PyTorch models with Amazon Elastic Inference | AWS Machine Learning Blog

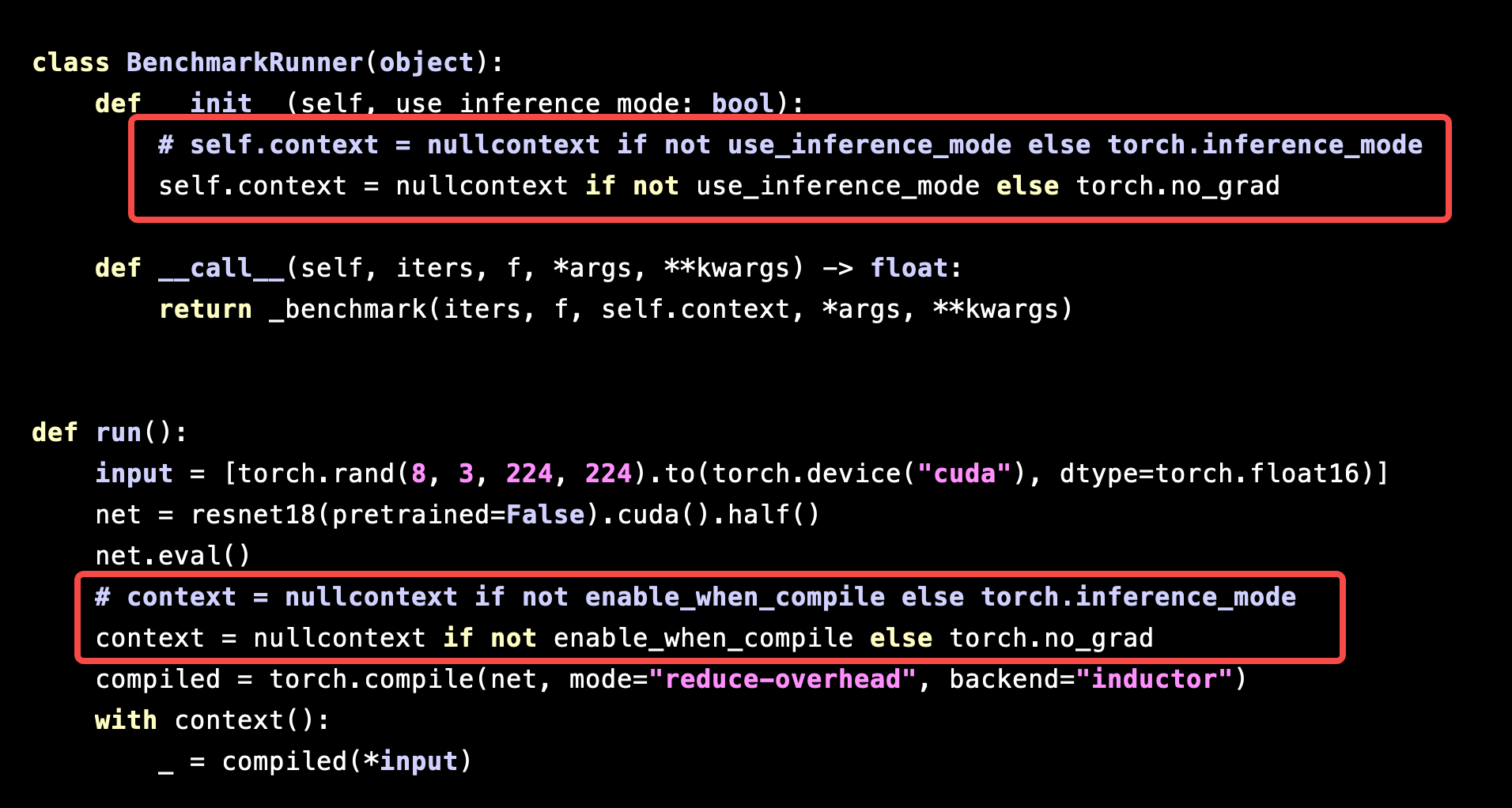

Performance of `torch.compile` is significantly slowed down under `torch.inference_mode` - torch.compile - PyTorch Forums

Achieving FP32 Accuracy for INT8 Inference Using Quantization Aware Training with NVIDIA TensorRT | NVIDIA Technical Blog

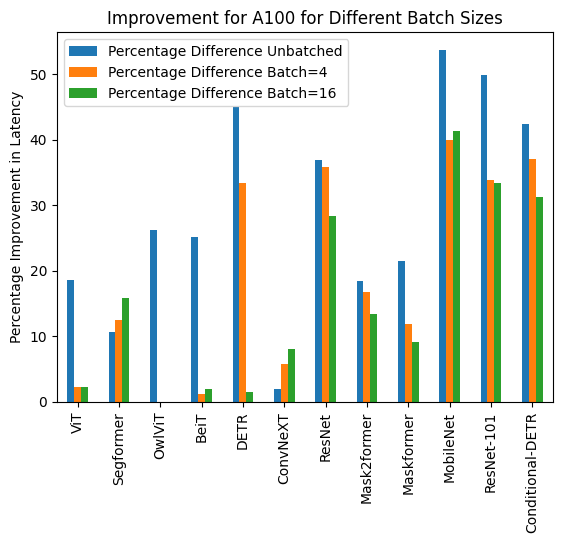

TorchServe: Increasing inference speed while improving efficiency - deployment - PyTorch Dev Discussions

Inference mode complains about inplace at torch.mean call, but I don't use inplace · Issue #70177 · pytorch/pytorch · GitHub

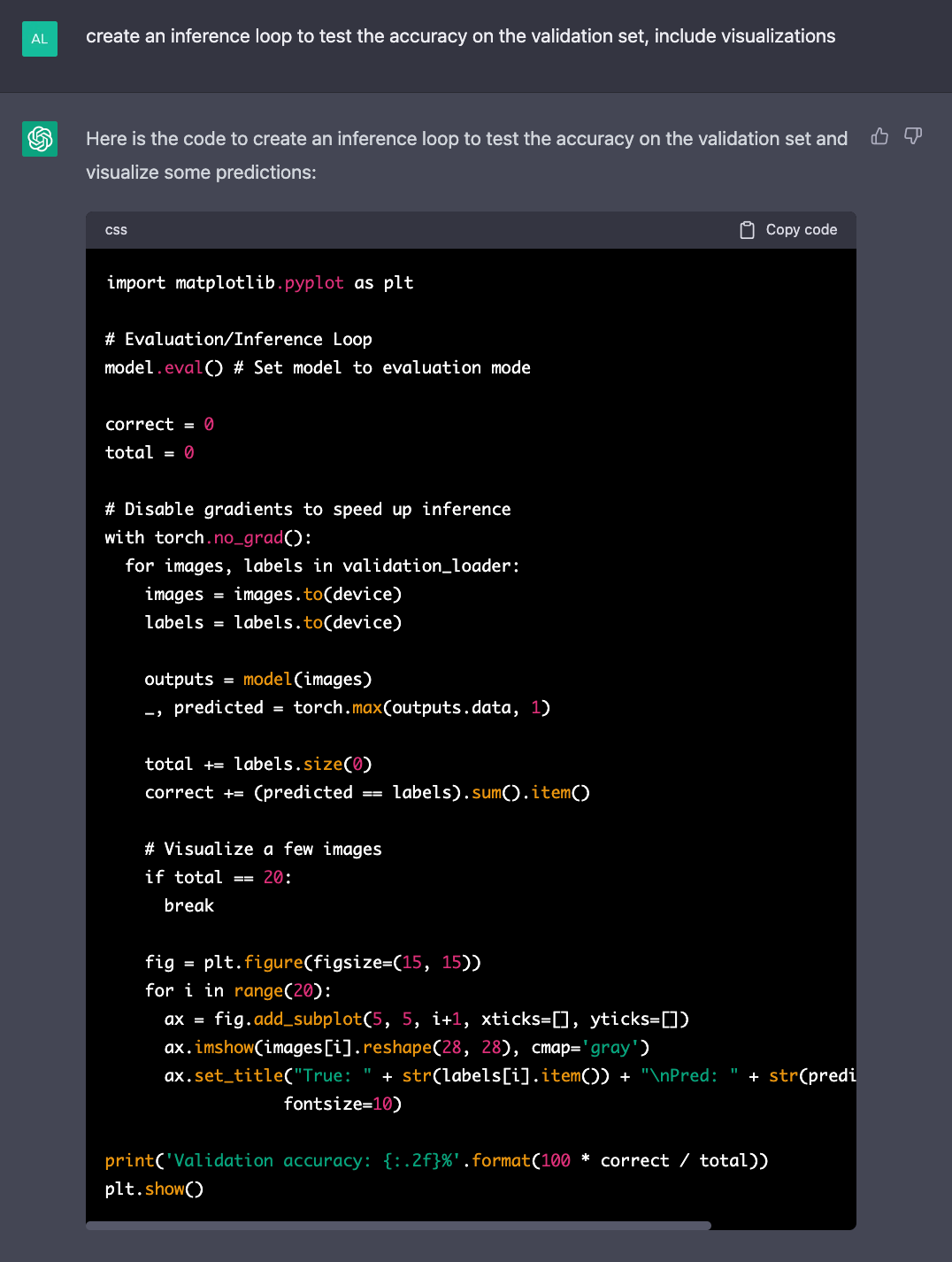

Abubakar Abid on X: "3/3 Luckily, we don't have to disable these ourselves. Use PyTorch's `torch.inference_mode` decorator, which is a drop-in replacement for `torch.no_grad` ...as long you need those tensors for anything
